Image from www.businesscontinuityjournal.com

Educational psychology literature shows, and research on the positive effects of gaming is making more widely known, that experiencing failure supports adaptive learning. Rohrkemper and Corno (1988) highlight the problematic duality of failure versus success, where failure is always bad and success is always good. This is in fact not true: constant success can be detrimental, and failure can improve performance (that is, learning from failure). Focusing on how students think, rather than what they know, is one step in the right direction, along with modeling adaptive behavior and teaching students to understand that tasks and learning can be malleable.

In library instruction, this reminds me of what Pegasus Librarian (I believe?) mentioned about giving students a "dirt-view" of research (I can't find the post; it could possibly have been an episode of Adventures in Library Instruction). Basically, we should show students that research takes work and builds on failure. It's almost hilarious when we show students practiced, perfect searches, because that is not how research works at all.

Kluger and DeNisi (1996) support this notion of learning through failure: after conducting an enormous meta-analysis of feedback intervention research, they conclude that the feedback literature is inconclusive and that effects vary widely depending on the situations and learners involved. They explain that learners are most successful when learning through discovery rather than through feedback, particularly controlling feedback (ahem, grades).

Brownell (1947) advocated for teaching meaning in arithmetic, typically treated as a rote "tool subject." You'd think the argument for teaching meaning would be quite clear, especially in 2013, but this debate continues in some ways. We can see the shift in information literacy: there seems to be more agreement now to move away from navigation and clicks ("bibliographic instruction") toward teaching students a more holistic understanding of research ("information literacy"). Barbara Fister, as always, is very eloquent in explaining the importance of this. But really this is another avenue for instilling resiliency in students: by focusing on higher order thinking (though higher order thinking is not appropriate in every context), we are truly looking at students' process rather than being interested only in the final product. As Brownell explains, teaching meaning provides a greater context in which students can find value in the subject being taught. Librarians can have real difficulty with one-shots (a model that could/should change) when it comes to building connections with students and motivating them to learn aspects of the research process; providing deeper knowledge about why, and not just what and how, can improve the learning environment.

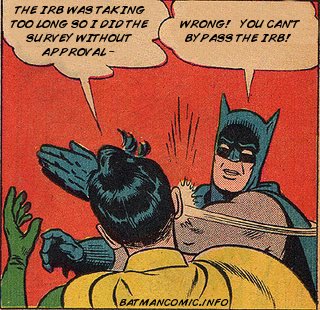

I was going to talk next about how to provide successful feedback, because it is important, but to avoid making this post so long that no one actually reads it, I'll just wrap up with this: whether in a class (credit-bearing or one-shot) or through more auxiliary approaches, libraries should be places for students to build grit and resiliency through exploring failure. We talk about how orientations are important for helping students develop a social connection and feel comfortable somewhere on campus, and this is a very important aspect of retention, but these safe spaces should also provide opportunities for students to take safe risks and learn how to adapt to failure. This doesn't necessarily mean libraries need to gamify the whole library or offer badges as a panacea for student retention or motivation concerns, but these are examples of methods that could prove useful. Setting up other opportunities in the library for students to test out ideas is another way to draw them in and instill adaptivity. Hopefully they are also getting opportunities for safe failure in their courses across campus, but it's certainly not a guarantee. Libraries should think about how we can provide opportunities for safe risk in a variety of ways, whether through instruction, programming, collections, or UX. It's one step in figuring out how we can support student retention initiatives on campus and demonstrate value.

--

Brownell, W. A. (1947). The Place of Meaning in the Teaching of Arithmetic. The Elementary School Journal, 47(5), 256-265.

Kluger, A. N., & DeNisi, A. (1996). The Effects of Feedback Interventions on Performance: A Historical Review, a Meta-Analysis, and a Preliminary Feedback Intervention Theory. Psychological Bulletin, 119(2), 254-284.

Rohrkemper, M., & Corno, L. (1988). Success and Failure on Classroom Tasks: Adaptive Learning and Classroom Teaching. The Elementary School Journal, 88(3), 297-312.